The Second Beyond the PDF workshop finally took place last week in Amsterdam (fortunately I got travel support from the organizers, so I was able to attend the full event). If I had to pick one word to describe the workshop, it would be “different”. As Paul Groth (one of the chairs) summarizes in his post, the audience was heterogeneous: there were people from the biomedical, humanities, social sciences and physical sciences domains, belonging to different types of organizations (ranging from academic to governmental). Publishers and editors were also present, and many different tools, visions and ideas were presented to improve the future of scholarly communication. This whole context was a bit different from what one is used to seeing at other conferences, where you find people doing things similar to what you do, and you discuss your research rather than how to communicate it to others. Here people were not afraid to tell publishers and editors why they thought the system was broken, laying out their arguments in an informal, friendly environment.

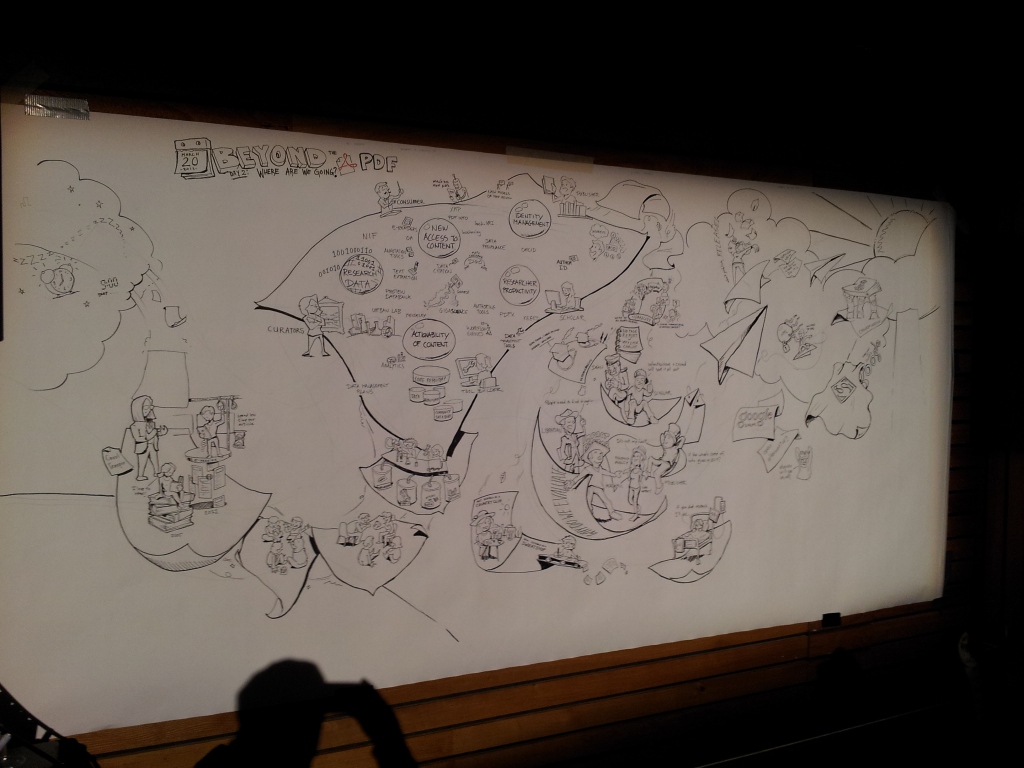

Another interesting feature was the “second screen” showing the Twitter wall live. People were very active, highlighting interesting quotes from the talks and starting debates in parallel to all the sessions. Even today the tag #btpdf2 is still active. Congrats to all the organizing staff!

Detailed summary and highlights

The program of the workshop is available here. Below you can see the summary and highlights from the different sessions and interesting quotes I wrote down in my notes.

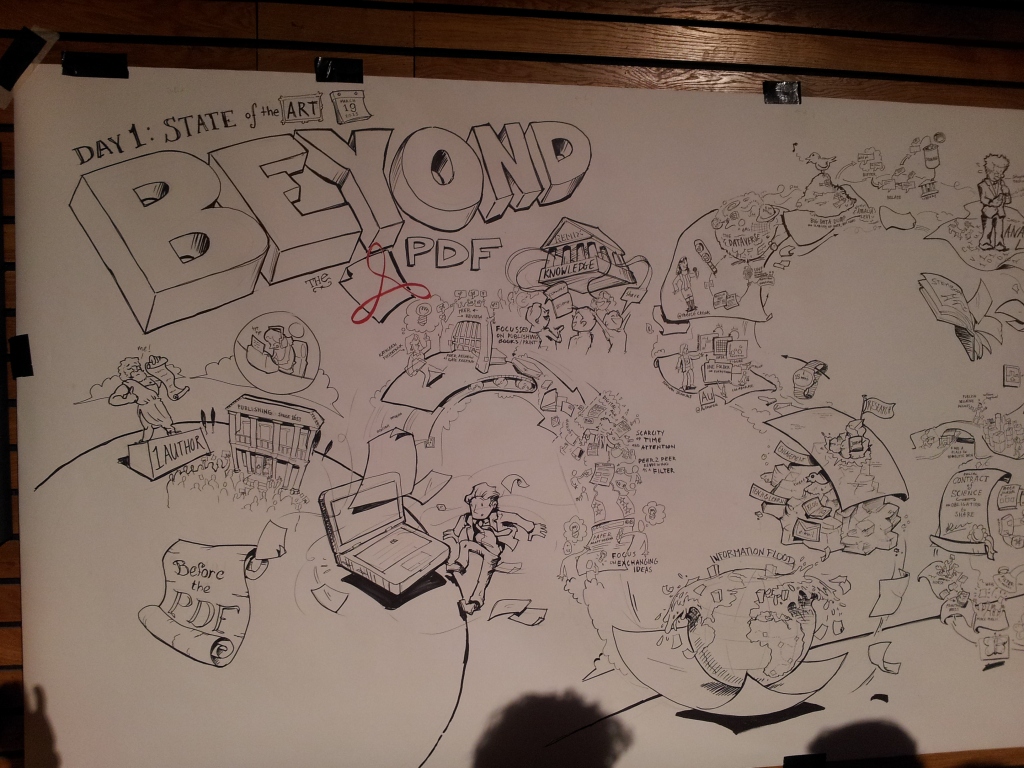

Day 1

The day started with a keynote by Kathleen Fitzpatrick, who explained how the book is not dead, although the academic book is kind of dying. The blog could be a replacement, since it is an alternative way to publish. You are able to get comments, feedback, suggestions and support from the community. Why couldn’t we be our own publishers?

The current reviewing process raises concerns; could it be part of what is broken? Bias and flaws are not unusual, and reviewing requires a great deal of labor for which we normally don’t receive much credit. As an example, she explained how the book she had been writing had more impact in blog form than in its final published format.

Finally, she remarked on how important online communities are. If you build a tool or a service without a community, people will not just come. You have to build a community first. Some interesting quotes: “Publishers will have to focus more on services and less on selling digital objects”. “We need filters, not gatekeepers” (referring to publishers and editors). “The network is not a threat. It helps to reach more people”.

Laura Czerniewicz and Michelle Willmers followed the keynote with a session on context. They highlighted the dangers of complete open access: will it become a flood of content? There is a need for a reward system. What do authors get from open access? Editors are gatekeepers. Another important factor is that in the end only journal articles are considered when judging the merit of a researcher. Tweets, blogs, talks, workshops and conferences are ignored, even when they could have had more impact than the actual journal articles. In most cases journal articles are just the tip of the iceberg.

Next, in the Vision session, Nathan Jenkins introduced Authorea, a very cool tool, built on Ruby on Rails, for writing articles online without having to deal with LaTeX compilation. Mercè Crosas presented Dataverse, a portal for archiving data results for citation purposes, motivated by the volatility of the links in old papers. Amalia S. Levi explained how in historical research a lot of the data already existed, but the links were missing. (This reminds me of some conversations that I’ve had recently about how papers are cited in the scientific community. It turns out that sometimes this is the case nowadays as well.) Joost Kircz hit the nail on the head in his speech (in my opinion): are we going Beyond the PDF or Beyond the essay? An enhanced PDF is still stuck in the page paradigm. Papers represent structured or randomized knowledge that should be browsed, and that is often not possible in a book. I liked his closing statement: “Publishing is not a science, but is a craft”. Lisa Girard followed with StemBook, a portal where authors can keep their findings up to date, allowing the community to review their work in stem cell biology. An interesting thing about it is that people can upload their protocols and annotate them using Domeo, which is aligned with the Annotation Ontology. Paolo Ciccarese followed with an overview of that ontology, summarizing their efforts and collaboration with the community to come up with a widely adopted standard.

As a small comment on this session, I find it a bit curious that so many finished (or nearly finished) tools were presented in a “Vision” session. It would have been interesting to see how some of the presenters picture the future of publication and how to get there (whether by using some of the presented tools or not).

After lunch there was a session on new models for content dissemination, which Theodora Bloom opened by stating very clearly what the main current problems for dissemination are:

- Access to what you want to read and use

- Publication venue as a measure of quality

- Having to repeat the cycle of publication in different journals

- Poor links to underlying data

She also explained how PLOS ONE also publishes research leading to negative results, but hardly anyone submits it. I really liked this; it reminded me of a quote from Thomas Edison: “I have not failed. I’ve just found 10,000 ways that won’t work”. If an idea looks promising but doesn’t work as expected, it’s important to share it with the community so that nobody else repeats the same mistake. Who knows, it might even inspire other people to come up with a better solution.

Brian Hole followed, talking about metajournals and the social contract of science, and combining them in the idea of an Ultrajournal.

The second part of the session was introduced by a lively Jason Priem, who talked about how the printing press had been the first revolution in disseminating content and the Internet the second one. According to him, we should mine the network in order to produce the appropriate filters for the information. Keith Collier followed by introducing Rubriq, an independent peer-review system that aims to decouple peer review from publication. Next, Kaveh Bazargan raised concerns about typesetters and argued that we should get rid of them. Instead, XML or blog posts should take over the role of typesetters, giving more freedom to the writer. Finally, Peter Bradley talked about Hypothes.is, an open source platform for the evaluation of information, and Alf Eaton introduced PeerJ, an open access peer-reviewed journal with metadata for all its papers.

The final session of the day was about the business case, where three representatives explained different business models and three stakeholders plus the audience asked questions about them. Wim van der Stelt argued that at Springer they are not resisting change, and Mark Hahnel argued that authors should be able to receive credit for their data, as happens in FigShare. The discussion brought some interesting topics to the table: that scholarly communication per se is not profitable and needs government funding, how to move from the journal impact factor to a measure that is meaningful (and convince the government to support it), and how to share our work with those who cannot afford to pay for it. Another important observation was the number of hours researchers spend per year on rejected papers, which adds up to 11-16 million!

The day ended with the session on demos and posters. Marco Roos and Aleix Garrido were by my side talking about the wf4ever project, while I spoke a bit about the work done reproducing the TB-Drugome workflow. The slides can be seen here.

Day 2

Carol Tenopir started the day by trying to analyze and understand the needs of scholars. She gave a lot of metrics about the main reasons scholars give for not sharing their data (“I have not the time” or “I’m not required to” were among the top five), and about how successful researchers turn out to read more. She also gave metrics on who is sharing data versus who is willing to share their data, and analyzed how e-books had influenced printed PDF copies. An interesting fact: in Australia, e-books have almost replaced printed copies.

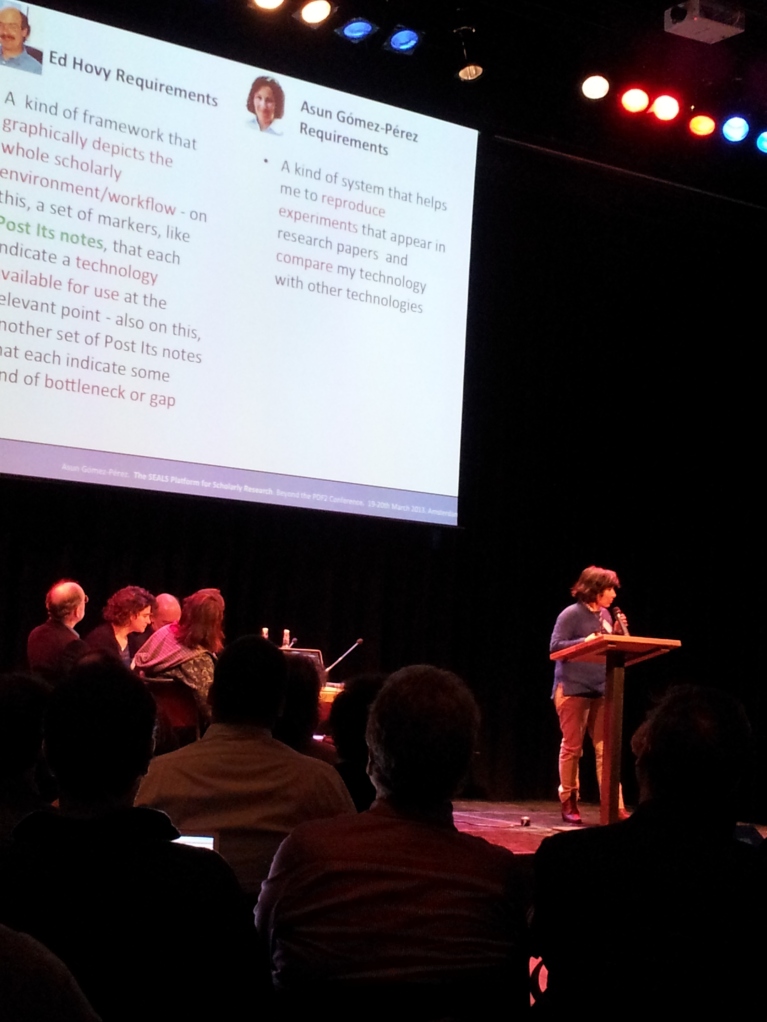

The “Making it happen” session was next. Asunción Gómez Pérez talked about the SEALS evaluation platform, which allows the different tests of an experiment to be reproduced automatically. Graeme Hirst spoke about usability, the “neglected dimension”, and how we are “forced” to use systems with poor usability like Word and LaTeX. The gain should be greater than the pain when writing a paper. Rebecca Lawrence followed, talking about data review and how to share data: the requirement of a data sharing plan, how things should be done according to standards, where to find the funding for the previous two, how we should refuse papers whose data is not accessible, and how a reviewer should have access to all the materials in order to review the paper properly.

The session finished with several short presentations that can be accessed here. Anita de Waard insisted on the need for a new reward system, although no further details were given. I also liked the talk by Melissa Haendel on reproducibility in science, even if she didn’t talk about the role of scientific workflows in reproducibility. Another interesting tool was ORCID, a registry for scholars with author disambiguation. Gully Burns ended the session by analyzing how different parameters change an experiment.

We broke out into different sessions during lunch. I went to the one on reproducibility, where we shared the different issues that currently exist when trying to store and rerun experiments. However, unlike the data citation group, we didn’t come up with a manifesto.

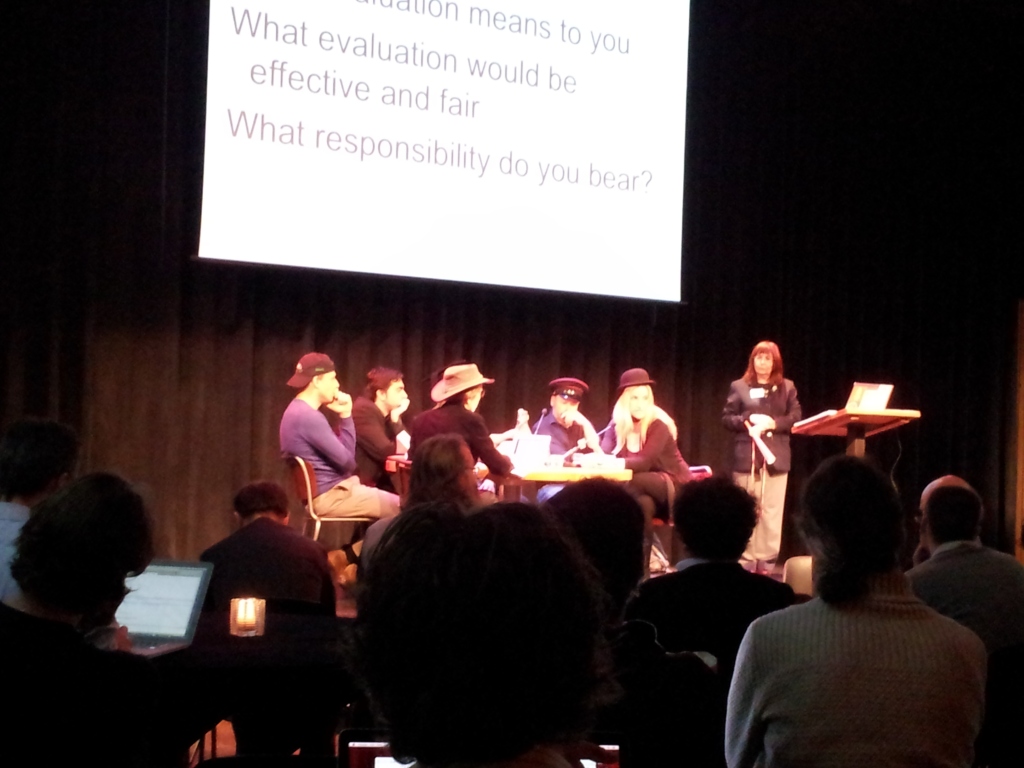

The next session dealt with new models for the evaluation of research, where the organizer, Carole Goble, proposed a little role play. Each of the six participants wore a different hat representing the role of their institution. Phil Bourne was the institutional dean (officer hat); Victoria Stodden (with the typical English bureaucratic hat, on the right of the picture) represented the public funding agencies; Christine Borgman represented the digital libraries (second-hand cowboy hat); Jan Reichelt, with the “cool” hat on the left, represented the commercial funders; Scott Edmunds represented the publishers with a top hat (unfortunately he wasn’t wearing it in the picture); and Steve Pettifer represented academia (he can’t be seen properly in the picture).

The summary of the discussion was as follows, for each role:

- Funding agencies: they are not interested in the evaluation of the academic research. It should be driven by the community.

- The dean: I’ll quote Phil’s performance:

“Oh, we have produced a 200 page report about the possible changes that we could do to the system.

– And what are you going to change?

– Very little!”

It is events like this one that provide the new ideas.

- Publishers and academia: death to impact factor.

- Commercial funders: code and methods matter. They should be treated as first-class citizens (I couldn’t agree more).

- Digital libraries: The standards are problematic. Tools don’t connect, and interoperability is an issue.

The final session, Visions for the future, grouped a set of flash talks from very different people. The most successful ones were given by Carole Goble (winner), who looked at the publication of data from a software engineering perspective and argued that we could issue several releases of the data, as happens with software: “Don’t publish, release!”; Stian Haklev, with his proposal to create an alternative to Google Scholar (I liked his answer to Ed Hovy, who asked what was new in his proposal: “There is nothing new about this, and that is precisely what is new, that we are just able to make it”); Jeffrey Lancaster, with his proposal to change the CSL citation styles; and Kaveh Bazargan, who demanded that publishers release the XML of the papers instead of the PDF. The job of a publisher should be to disseminate content, not to dictate to us how to read the papers. He even did an online demo of a tool that could render the PDF from the XML in several different ways depending on the user’s preferences.

I also found the proposal by Alejandra Gonzalez-Beltran interesting; she talked about isa-tools, a platform used by pharmaceutical companies for the collection, curation and reuse of datasets. And of course there was the idea of Olga Giraldo, who wants to provide the means to transform laboratory protocols into nanopublications and to provide checklists to organize them properly. Below you can see a picture of the participants in the session:

And that’s all! In summary, I think it was a nice event with a lot of discussion and demands from academia addressed to editors, publishers and funding agencies. Of course, I guess that part of the motivation of the workshop is for them to gather ideas on how the system could be changed, along with an overview of the state of the art in tools and platforms that they could incorporate into their systems.

Results, next steps?

There was a lot of debate but no session on what the next steps should be. I think this would have been interesting to have, although it is difficult to fit everything into a two-day event. As for results, some of the people participating in the breakout sessions wrote the “Data citation manifesto”, which I would really like people to follow in order to give credit for data (link here, please share!).

Also, the idea of an open Google Scholar (an open alternative, as OpenStreetMap is to Google Maps) looks promising. I hope it gets implemented!

And finally, some personal thoughts. After attending the event I realized that, as a computer scientist working to enable the reproducibility and reusability of other people’s work, in my own area we sometimes don’t follow the reproducibility principles ourselves: papers about tools that are no longer available after a while, published algorithms without an implementation, unstable links, etc. I have always tried to include a reference to the code and evaluations done in my work so that reviewers can access them, but I might start using some of the tools shown in the workshop for the sake of preservation.